Metadata Day 2020

By Paco Nathan

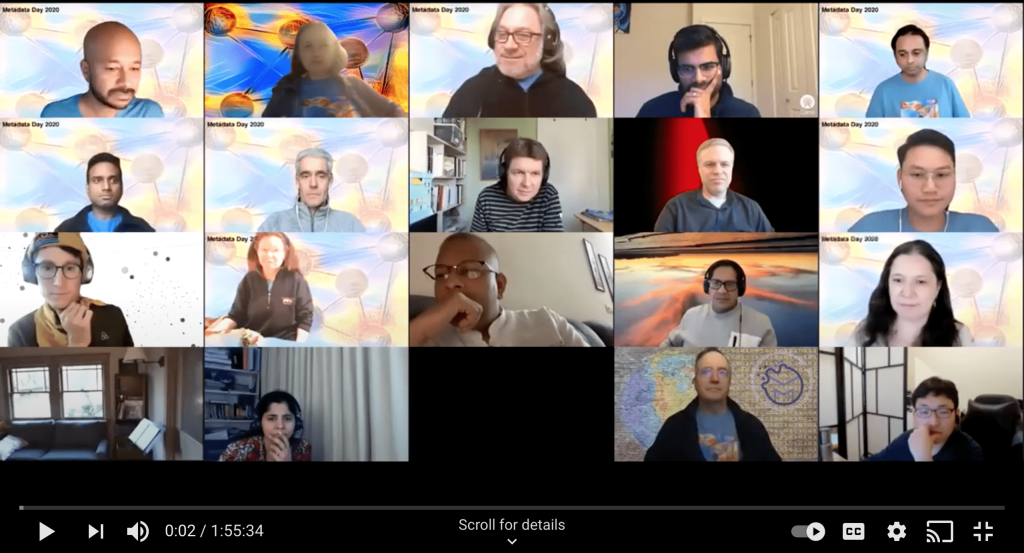

For a full day on December 14, 2020, LinkedIn sponsored a virtual workshop called Metadata Day, followed by a public online meetup called Metaspeak. Videos of the event are available on their website, including a set of lightning talks given by several of the speakers.

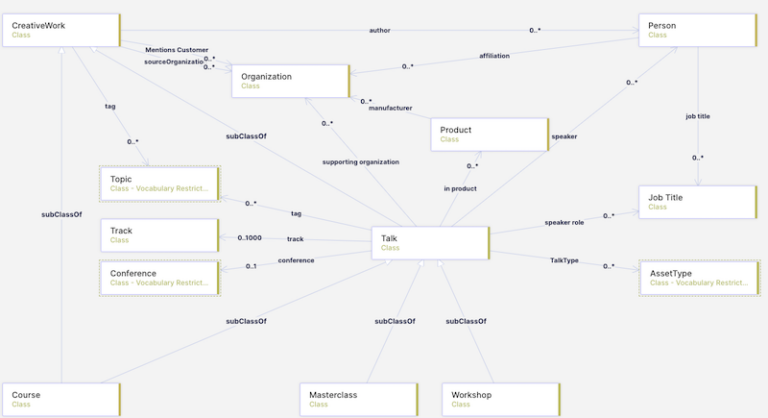

The gist is that circa 2018, the pending regulatory compliance for GDPR, CCPA, etc., drove urgency in enterprise organizations to have a better understanding and accountability about datasets and their use cases. Several firms, about a dozen, responded in strikingly similar ways – which signals that a kind of “design pattern” is emerging for how to handle these compliance challenges. Given how the datasets in question are connected to one or more use cases, and how each use case requires use of one or more datasets to train machine learning models, when auditors begin asking difficult questions about data provenance, the answers usually require graph-based approaches.

Of course, data governance is not a new topic – it’s been around for decades. Good products exist for managing data catalogs, tracking lineage/provenance, and so on. What changed was (1) urgency since 2018; and (2) a crucial role of graphs for understanding metadata. How are connected datasets getting used? Which team is responsible for producing and updating each dataset? Who manages the metadata? What customer products rely on a given dataset?
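The questions above map naturally onto edges in a graph. As a minimal sketch (not how any particular metadata platform is implemented), the following Python keeps a small in-memory graph of typed edges and answers ownership, dependency, and lineage questions. The class `MetadataGraph`, the relation names (`owns`, `derived_from`, `relies_on`, `trained_on`), and every team, dataset, model, and product identifier are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


class MetadataGraph:
    """Tiny in-memory graph of typed edges between metadata entities (illustrative only)."""

    def __init__(self):
        self._out = defaultdict(set)  # (source, relation) -> set of targets
        self._in = defaultdict(set)   # (target, relation) -> set of sources

    def add_edge(self, source, relation, target):
        self._out[(source, relation)].add(target)
        self._in[(target, relation)].add(source)

    def targets(self, source, relation):
        """Entities that `source` points to via `relation`."""
        return sorted(self._out.get((source, relation), set()))

    def sources(self, target, relation):
        """Entities that point to `target` via `relation`."""
        return sorted(self._in.get((target, relation), set()))

    def upstream(self, dataset):
        """All datasets that `dataset` is transitively derived from."""
        seen, stack = set(), [dataset]
        while stack:
            node = stack.pop()
            for parent in self._out.get((node, "derived_from"), set()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return sorted(seen)


def build_example_graph():
    """Hypothetical teams, datasets, a model, and a product."""
    g = MetadataGraph()
    g.add_edge("team:growth", "owns", "dataset:raw_events")
    g.add_edge("team:analytics", "owns", "dataset:sessions")
    g.add_edge("team:ml", "owns", "dataset:features")
    g.add_edge("dataset:sessions", "derived_from", "dataset:raw_events")
    g.add_edge("dataset:features", "derived_from", "dataset:sessions")
    g.add_edge("model:churn", "trained_on", "dataset:features")
    g.add_edge("product:retention_dashboard", "relies_on", "dataset:sessions")
    return g


graph = build_example_graph()
# Which team is responsible for a dataset?
print(graph.sources("dataset:sessions", "owns"))
# Which customer products rely on a given dataset?
print(graph.sources("dataset:sessions", "relies_on"))
# Provenance: which datasets feed into the model's training data?
print(graph.upstream("dataset:features"))
```

The lineage query is a graph traversal of unknown depth, which is the kind of question that is awkward to answer from static catalog tables alone.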

A 2017 paper out of UC Berkeley’s RISELab explored these questions formally: “Ground: A Data Context Service” by Joseph M. Hellerstein, et al., CIDR ’17, which anticipated needs that the industry would begin to recognize the following year. One of Prof. Hellerstein’s grad students, Shirshanka Das (co-organizer of Metadata Day 2020), joined LinkedIn and led their data compliance initiatives. In the article “DataHub: Popular metadata architectures explained” he describes the evolution of frameworks for this kind of use case, particularly regarding the DataHub platform developed at LinkedIn. Early data catalog solutions involved relatively static metadata, represented by database tables. A second generation followed, providing metadata API services. However, more contemporary needs in enterprise (since GDPR, etc.) demand graph-based solutions.

Mark Grover held a similar role at Lyft, as product manager for the Amundsen project, coordinating their solutions for GDPR compliance needs. In the article “The data production-consumption gap”, Mark describes how Lyft recognized this as an organizational problem – where understanding enterprise use of connected datasets requires much more than a data catalog. There’s an inherent producer/consumer problem where HR data about the organization is needed (“Who’s responsible for curating XYZ?”) as well as data from operations and other departments. Graph-based representation allowed Lyft to collect and integrate metadata at a layer above the typical departmental data silos. Coupled with discovery and search UX, the experiences with Amundsen led to a stark discovery: “Over 30% of analyst time was wasted in finding and validating trusted data.” Lyft has 250 data analysts, so their 30% overhead translates to roughly $20M/year. Given this new “360 view” of their data assets, they also began to discover relations among their connected datasets that could open new business opportunities. Two major enterprise costs – compliance as a risk and the overhead of impedance across teams – were converted into business upside. Thirty firms now use the Amundsen platform, including ING Bank, Square, Instacart, Workday, Edmunds, etc. Mark also presented in the session “From discovering data to trusting data” at Knowledge Connexions.
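The overhead figure can be sanity-checked with simple arithmetic. The sketch below uses only the three numbers quoted above (250 analysts, 30% of time, roughly $20M/year) and backs out the per-analyst annual cost that they imply. That implied cost is a derived value, not something the source states, and the function names are hypothetical.

```python
def wasted_analyst_equivalents(analysts, wasted_fraction):
    """Number of full-time analysts' worth of effort lost to the overhead."""
    return analysts * wasted_fraction


def implied_annual_cost_per_analyst(total_overhead_usd, analysts, wasted_fraction):
    """Per-analyst annual cost implied by the quoted total overhead (derived, not sourced)."""
    return total_overhead_usd / wasted_analyst_equivalents(analysts, wasted_fraction)


ANALYSTS = 250                  # from the article
WASTED_FRACTION = 0.30          # from the article
TOTAL_OVERHEAD_USD = 20_000_000  # "roughly $20M/year" from the article

lost = wasted_analyst_equivalents(ANALYSTS, WASTED_FRACTION)
cost = implied_annual_cost_per_analyst(TOTAL_OVERHEAD_USD, ANALYSTS, WASTED_FRACTION)
print(f"Analyst-equivalents lost per year: {lost:.0f}")
print(f"Implied annual cost per analyst: ${cost:,.0f}")
```

In other words, the quoted numbers amount to about 75 analyst-equivalents per year at an implied cost of roughly $267K each, which is plausible only as a fully loaded figure rather than salary alone.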

Approximately a dozen tech companies reached similar realizations about GDPR-era data context and graph-based metadata approaches: LinkedIn, Lyft, Netflix, Airbnb, Uber, PayPal, and so on. Most of these efforts resulted in open source projects. Eugene Yan authored an excellent survey article “Data Discovery Platforms and Their Open Source Solutions” to compare/contrast these projects. The common ground is that these projects tend to combine graph-based overlays atop organizational data silos, along with serious investments in UX work, to provide local search plus recommender systems. This design affords understanding and trust about connected datasets, their use cases, and also about the relationships within an organization – since one lesson learned is that root problems in this area often require executives to intercede.

Another key observation: three tech start-ups have recently spun out based on this new category of data context, with these use cases and open source projects as a core. Venture capital investors describe this kind of situation in terms of category-defining companies. In other words, as certain start-ups begin to reshape the industry, equity growth typically follows.

Recognizing that an industry “moment” was in progress, LinkedIn organized the Metadata Day event to pull together the people leading these projects. The event was structured in two parts. The first was an invitation-only virtual workshop (Foo Camp style), which split into one track for systems implementations (i.e., issues about production and scale) and another track for articulating use case issues (i.e., expertise about metadata and knowledge graphs). The latter track included: Joe Hellerstein; Natasha Noy (Google) and Deborah McGuinness (RPI), who co-authored the famous “Ontology 101” paper while at Stanford; data governance pioneer Satyen Sangani from Alation; Alejandro Saucedo from Seldon; plus metadata experts Daniella Lowenberg, Ted Habermann, and Ian Mulvany. Discussions in each track worked to articulate key points about their practices, which were quickly assembled into slide decks. Then the tracks reconvened to compare notes.

The second part of the Metadata Day event was a public online meetup, introduced with a keynote talk by LinkedIn CDO Igor Perisic. Both tracks presented their slide decks as panel discussions, with plenty of audience Q&A. View the full video of the #MetaSpeak meetup.

One of the quintessential insights out of Metadata Day was articulated by Natasha Noy during our discussion about “What is a knowledge graph?” Natasha explained that Google’s Knowledge Graph project, while well known for its use within search engine results, also fulfills another crucial role: helping to cohere Google’s data context across many different data silos. To paraphrase Natasha, there was a realization that no “one data framework to rule them all” would ever happen, and instead it was important to decentralize services for translating schema and identifiers (e.g., “foreign keys”) across different data frameworks, allowing many teams to work independently on the kinds of producer/consumer challenges described above by LinkedIn and Lyft.
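To make the idea of translating identifiers across frameworks concrete, here is a minimal sketch. It assumes each team registers how its own local keys correspond to a shared entity identifier, so that a key from one system can be translated into another without either system adopting the other's schema. This is purely illustrative and says nothing about how Google's services work; the class `IdentifierRegistry`, the system names (`warehouse`, `crm`), and all identifiers are hypothetical.

```python
class IdentifierRegistry:
    """Maps per-system local identifiers to shared entity identifiers (illustrative only)."""

    def __init__(self):
        self._to_shared = {}    # (system, local_id) -> shared_id
        self._from_shared = {}  # (shared_id, system) -> local_id

    def register(self, system, local_id, shared_id):
        """Record that `local_id` in `system` refers to the entity `shared_id`."""
        self._to_shared[(system, local_id)] = shared_id
        self._from_shared[(shared_id, system)] = local_id

    def translate(self, from_system, local_id, to_system):
        """Return the matching identifier in `to_system`, or None if no mapping is known."""
        shared_id = self._to_shared.get((from_system, local_id))
        if shared_id is None:
            return None
        return self._from_shared.get((shared_id, to_system))


registry = IdentifierRegistry()
# Each team registers only its own keys against the shared entity.
registry.register("warehouse", "cust_000123", "entity:customer/42")
registry.register("crm", "A-7781", "entity:customer/42")

print(registry.translate("warehouse", "cust_000123", "crm"))
print(registry.translate("crm", "A-7781", "warehouse"))
```

The design choice worth noting is that neither system needs to know the other's key format: each one maintains only its own mapping to the shared entity, which is what lets teams work independently.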

To be fair, this is certainly not a “solved problem”, nor are graph-based methods a panacea for resolving tech debt and cross-team impedance in enterprise IT. The challenges of data silos and enabling cross-team collaboration are organizational problems at their core, which require leadership to resolve. These data context platforms introduce open source solutions and related practices which can help greatly, provided the corporate leadership and the will to resolve these problems are there first. Graph-based approaches are not a magic solution, though they are proving to be the more effective approach.

Also recognize that using knowledge graphs about metadata as an “overlay” to make an organization’s data silos cohere is not specific to Google – we’re hearing about this kind of practice across many enterprise organizations. Mainstream IT may not be referring to these kinds of practices as knowledge graphs – not yet, at least if one listens to Gartner Research and their “Hype Cycle”. However, the reality on the ground, as Metadata Day showed, is that Silicon Valley is both driving and recognizing a category-defining moment.

From within the KGC community, there are two related articles:

- “Introduction to Metadata Day” by Paco Nathan (before the event)

- “Rethinking the search over your dbt models” by Louis Guitton (reviewing the event)

To add more context, there have also been notable, long-term efforts in research towards similar ends, which focused on delivering research results along with the code and data required to reproduce them. That’s the academic research analog for what data science teams perform in industry. Notably, Carole Goble and colleagues at University of Manchester have pushed toward better tooling and practices for data context in the sciences over the past two decades, e.g., the FAIR Guiding Principles for scientific data management and stewardship. Their work has been foundational for semantic technologies. Another good example is the work by Jeremy Freeman at HHMI Janelia and their CodeNeuro, weaving a web of open source support for understanding and leveraging connected datasets in neuroscience worldwide. Project Jupyter’s Binder was a direct result. Part of Metadata Day’s pretext was to bring experts from the academic side who’ve had decades of work in metadata management and connected datasets, together with open source project leads from leading tech firms, to find and articulate common ground to help guide other organizations.

One thing to watch: as more companies turn to graph-based solutions for their data context, i.e., addressing their needs to understand and trust metadata about connected datasets, that creates pervasive opportunities for leveraging this new category of graph data with even more sophisticated graph-based approaches. Most of the solutions mentioned above are relatively light in terms of leveraging semantic technologies, inference, graph embedding, advanced approaches for interactive graph visualization at scale, etc. That absence was quite apparent from Metadata Day discussions on the “production” side.

One wonders what will happen when the new graph acolytes in Silicon Valley – and the many enterprise firms who follow their lead and adopt their open-source frameworks – begin to discover KGE, UMAP, SHACL, and so on. Considering the point by Natasha Noy at Google, the foundations for knowledge graph work are becoming widespread across enterprise IT; this new abstraction layer of discovery and trust, driven by regulatory compliance, may catalyze industry adoption of enterprise knowledge graphs. And that practice may happen years before Gartner Research decides to give it a name?